This post describes how we worked with AI to prepare the exhibition “A Journey with AI into Cherkashins’ Art of the 1990s”, which was shown at the Vitebsk Art Museum in June-July 2023.

How It All Started

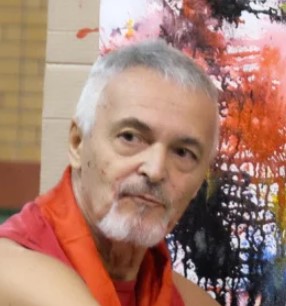

About 10 years ago I was lucky enough to meet Valera and Natasha Cherkashin - famous photo artists, performance artists and just a wonderful couple! Their works are present in many major museums around the world.

Last fall I visited their exhibition “Love in the Epoch of Change” at the Beton center of visual culture. I immediately thought that the Cherkashins’ original works from the 1990s would be great material for training a generative neural network. Their distinctive style came from printing the photo (usually from one negative, but sometimes from several), followed by chemical etching or drawing on top. Finally, the photos were framed with red marker, crumpled newspapers, etc.

We talked several times with Valera and Natasha about this idea, but for some reason it did not inspire them - there was a fear that the generation of works using AI could lead to the devaluation of art.

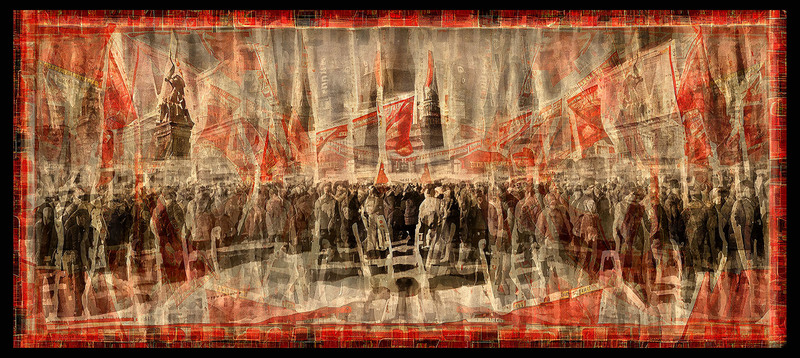

However, I took the liberty of fine-tuning the Stable Diffusion 1.5 model on the Cherkashins’ style, using 120 photos taken in the gallery. Here are the first works produced by this neural network:

The first experiments with a neural network trained in the “Cherkashins’ mirages” style, 2023

I sent those images to the artists, and they were intrigued. Around the same time, Valera and Natasha were invited to put on an exhibition as part of the PhotoKrok 2023 Festival in Vitebsk, and the idea of presenting AI art there seemed attractive. We started to work.

AI Creative Process

I initially fine-tuned the Stable Diffusion 1.5 neural network on the Cherkashins’ works from the 1990s, and the network learned to generate images in the characteristic style of the Cherkashins’ collage mirages. About 150 works were used for training, each manually cropped to a square at a resolution of 512x512. The result was a neural network that could draw images from a text query while preserving the artists’ individual style.
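The square-crop step can be illustrated with a minimal sketch. The crops for this project were made by hand, so the centered crop below is only an assumed stand-in, and the function names `center_square_box` and `prepare_training_image` are hypothetical. The second function relies on the third-party Pillow package.

```python
def center_square_box(width: int, height: int) -> tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of the largest centered square."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


def prepare_training_image(src_path: str, dst_path: str, size: int = 512) -> None:
    """Crop an image to a centered square and resize it to size x size.

    Assumption: a centered crop is acceptable. The original crops were
    chosen manually, which lets a person keep the important part of a work.
    Requires Pillow, imported lazily so the helper above works without it.
    """
    from PIL import Image

    with Image.open(src_path) as img:
        box = center_square_box(img.width, img.height)
        square = img.convert("RGB").crop(box).resize((size, size), Image.LANCZOS)
        square.save(dst_path)


# A landscape 800x600 photo loses 100 pixels on each side.
print(center_square_box(800, 600))
```

A manual crop remains preferable when the subject sits off-center, which is common for gallery photos taken at an angle.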

Since the original works mainly depicted architectural monuments of the USSR and people of that era, the neural network copes best with similar requests. Generating anything else can be difficult, but sometimes leads to interesting results.

Valera, Natasha, and I got together several times and, using the best prompt engineering techniques, generated about 200 images at a time (1-1.5 hours of work).

Here are some of these images:

|  |  |

|---|

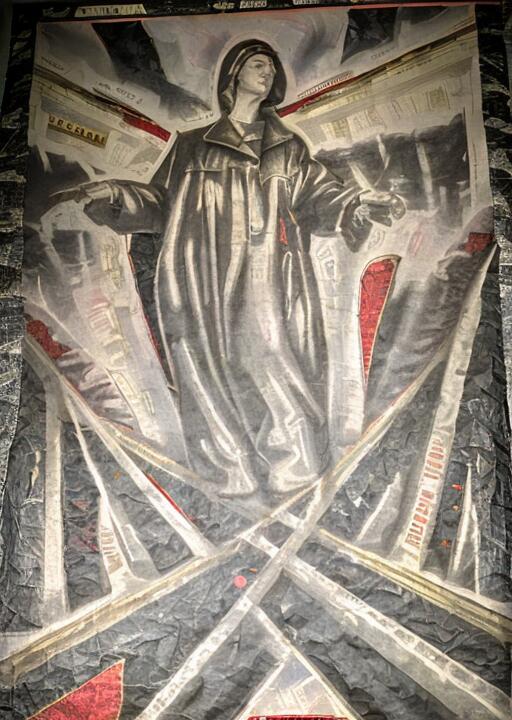

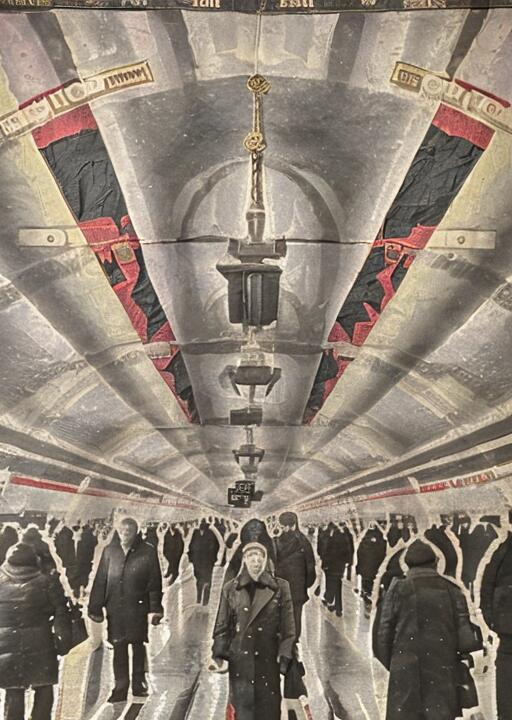

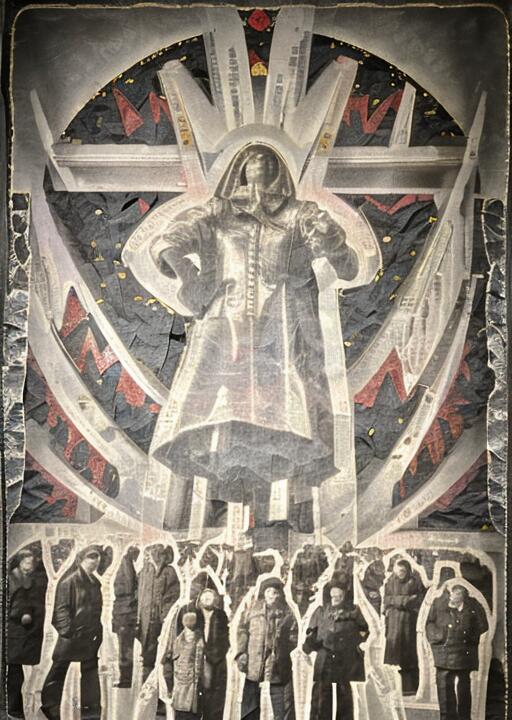

| Madonna of artificial intelligence (the original version of the neural network) | Moscow Metro (the original version of the neural network) | The idol (the original version of the neural network) |

Note the reflections in some of the images - they appeared because the neural network was trained on photos taken with a phone at a real exhibition in the Beton gallery.

Advanced prompt engineering techniques included combining different styles. For example, we tried combining the Cherkashins’ style with Van Gogh - the pictures below show the influence of the painting “Starry Night”:

Request: Starry night over red square, by Vincent van Gogh, Cherkashin collage style. These images differ too much stylistically from the Cherkashins’ works, so they were not included in this series, but we then used the technique with the addition of stylization when generating works for the interior of MAI.
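The style combination in the request above amounts to appending several style phrases to a subject. Here is a minimal sketch of that idea; the helper name `build_prompt` is hypothetical and is not part of any Stable Diffusion tooling.

```python
def build_prompt(subject: str, styles: list[str]) -> str:
    """Join a subject and any number of style phrases into one text prompt."""
    parts = [subject.strip()] + [s.strip() for s in styles if s.strip()]
    return ", ".join(parts)


# Rebuild the request quoted in the text from its three components.
prompt = build_prompt(
    "Starry night over red square",
    ["by Vincent van Gogh", "Cherkashin collage style"],
)
print(prompt)
```

Keeping the subject and the style phrases separate makes it easy to try the same subject with different style mixes, or to drop a style that pulls the result too far from the target look.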

Then, at each meeting, out of ~200 works, we carefully selected the 2-3 best images, which were then upscaled using a Stable Diffusion Upscaler with a special prompt to work out the fine details. After that, we took all the upscaled images and selected about 12 works for the exhibition.

Then, Valera and Natasha worked on these images in Photoshop, putting in additional touches, plus their energy and passion. This process included not only manipulating image parameters, but also drawing additional details and color elements, as well as adding fragments of images from other artifacts generated by the neural network (this is clearly visible, for example, in the painting Madonna of Artificial Intelligence, see below). Look at what the original neural network-generated images shown above have turned into:

|  |  |
|---|---|
| Madonna of Artificial Intelligence (final version) | The idol (final version) |

You can view all the works presented at the exhibition at the Cherkashins Metropolitan Museum.

Let’s now speculate a little: what role did artificial intelligence play in this process? Is it just a tool, or can we consider it a co-author of the works? There are different points of view on this question.

| Of course, a neural network cannot create a work of art by itself - it only makes a draft, a "matrix", which we can then breathe life into and "spiritualize" to turn it into a finished work. But we are very impressed with the capabilities of AI (or "Ivan Ivanych", as we call it). You could say it has even given some new momentum to our art. | Valera Cherkashin |

Indeed, we can consider AI as a tool of a digital artist, which we first train and then use to generate random combinations of images, just as a modern artist is sometimes inspired by accidentally spraying paints on canvas.

However, looking at the images above, it is difficult to dismiss the fact that the image created by the neural network, even before the final “spiritualization”, already contains a significant part of the aesthetics and even the message. And although such an image was created by a purely “insentient” neural network, this does not devalue its artistic message and value.

| Dmitry Soshnikov | Drawing with the help of a neural network resembles how the students of a great artist, having learned his style, create similar works. For example, a street artist can create portraits in the style of Van Gogh, and in this case we are inclined to consider that artist the author of the work, because he was the one who put paint on canvas. Similarly, the neural network "learns" Van Gogh's style from his works, and then creates some kind of random (or not so random) combination in this style. If an AI artist puts in some meaning through a text prompt, and then selects among the resulting works, then it seems logical to consider both the person and the AI as co-authors of the final piece. |

Another argument is the emotional response that we have when viewing newly generated pictures. The generation process itself is very exciting, because each time we are looking forward to how AI interprets our request in an unexpected way.

Generating works is like traveling through an art gallery, only this gallery is potentially infinite and adaptive, i.e. it can show us an unlimited number of works that meet our textual requests. Depending on how detailed the request is, we can delegate more or less of the creative work to the AI.

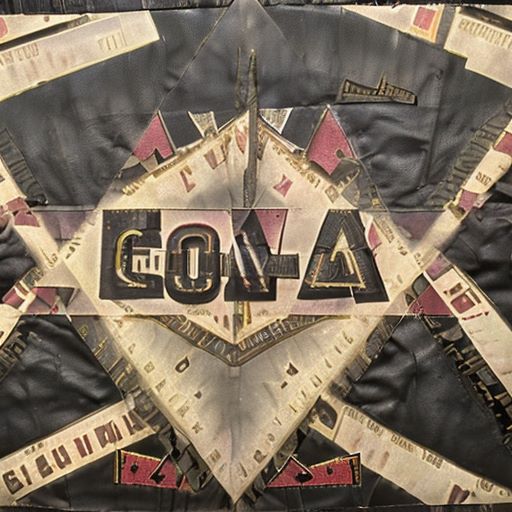

| This work illustrates that AI can generate unexpected ideas. Initially, we wanted to draw the Egyptian pyramids of Giza in the Cherkashin style, but instead we got a СОЛА logo. We gladly included this work in the exhibition anyway, although its idea differed significantly from the one we originally wanted to convey. |

Of course, authorship is not that simple, since from a legal point of view a neural network is not a legal entity and cannot be a copyright owner. For example, major scientific publishers (Springer, etc.) prohibit listing generative AI among a paper's co-authors - primarily because the neural network cannot be responsible for what it writes. At the same time, the use of such tools when writing scientific papers is in no way prohibited.

Whether or not to specify a neural network as co-author of your work is your decision (at least for now). The good news is that the neural network will not be offended either way.

Empathy and “Thirst for Creativity”

An important point that distinguishes a neural network from a person is that the former has no emotions and no ability to empathize, which is why a neural network cannot distinguish a bad piece of art from a good one (i.e., one that evokes emotions). Therefore, in order to create works that touch their audience, the process of selecting the best images from everything that was generated is critical. This process can to some extent be automated by crowdsourcing, but the involvement of a person at the selection stage is necessary. Let me reemphasize that in our case we selected 12 works for the exhibition out of 500-600.
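To put that selection ratio in perspective, here is a small arithmetic sketch using only the figures quoted above (12 selected works out of 500-600 generated). The function name `selection_rate` is just an illustrative choice.

```python
def selection_rate(selected: int, generated: int) -> float:
    """Return the share of generated images that were kept, in percent."""
    return 100.0 * selected / generated


# Bounds implied by "12 works out of 500-600".
low = selection_rate(12, 600)
high = selection_rate(12, 500)
print(f"{low:.1f}% to {high:.1f}%")
```

In other words, only about 2 out of every 100 generated images made it into the exhibition.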

The second important aspect is related to goals and desires, which only people have. Only a person has a desire for creativity and an inherent need to express certain thoughts and feelings in the form of artistic artifacts. To fully automate the creation of works by a neural network, this initial creative spark (in practice, a text request for image generation) has to come from somewhere. Of course, we can use a random number generator to choose from a variety of topics, but there is a high probability that such a random topic would be less valuable to the audience than works that show problems and ideas that real people care about and want to express.

These two reasons lead to the fact that the creation of emotionally and intellectually engaging pieces of art is not yet possible without human participation. However, the joint venture of a person and artificial intelligence opens up many potentially interesting ways of developing contemporary art, which we still have to fully explore…